To bridge this gap, the DIS group has released a dynamic point cloud dataset that depicts humans interacting in social eXtended Reality (XR) settings. In particular, audio-visual data (RGB + Depth + Infrared + synchronized Audio) were captured and released for a total of 45 unique sequences of people performing scripted actions. The screenplays for the actors were designed to simulate a variety of common use cases in social XR, namely (i) Education and training, (ii) Healthcare, (iii) Communication and social interaction, and (iv) Performance and sports. Diversity in gender, age, ethnicity, materials, textures, and colors was also taken into account.

The capture system was built from commodity hardware so that it resembles realistic setups and is easier to replicate. The dataset complements existing ones, since it uses the latest versions of commercial depth-sensing devices and records lifelike human behavior in social contexts at reasonable quality. The release provides annotated raw material, the resulting point cloud sequences, and an auxiliary software toolbox to acquire, process, encode, and visualize data suitable for real-time applications.

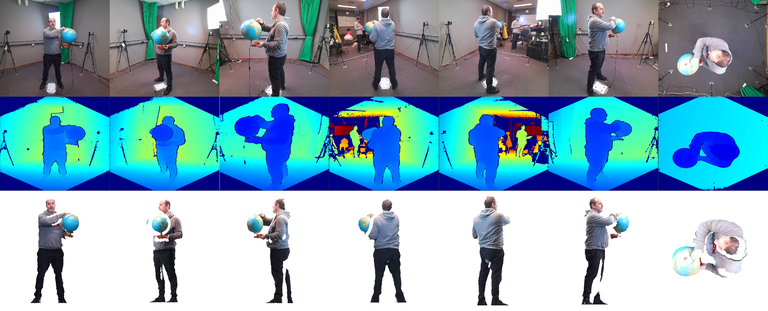

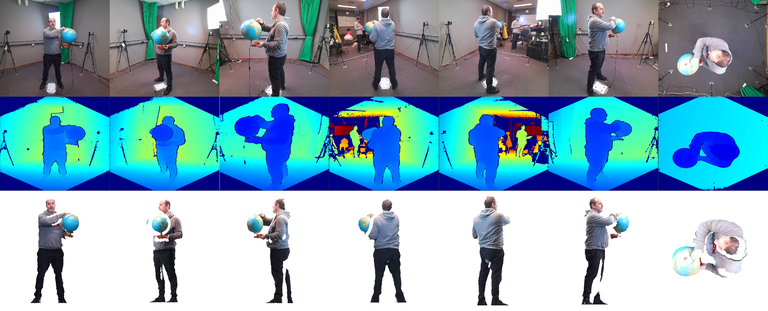

Illustration of RGB (1st row) and Depth (2nd row) raw data captured by our camera arrangement, and corresponding point cloud frames (3rd row) that are generated offline.
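To make the relationship between the depth rows and the point cloud rows more concrete, the sketch below back-projects a single depth image into a colored point cloud using the standard pinhole camera model. It is a minimal illustration, not the dataset's own toolbox: the function name `depth_to_point_cloud`, the intrinsics `fx`, `fy`, `cx`, `cy`, the millimeter depth unit (`depth_scale = 0.001`), and the assumption that the depth image is already registered to the RGB image are all hypothetical choices made for this example.

```python
from typing import List, Sequence, Tuple

# One colored point: x, y, z in meters, followed by r, g, b in 0..255.
Point = Tuple[float, float, float, int, int, int]


def depth_to_point_cloud(
    depth: Sequence[Sequence[int]],
    rgb: Sequence[Sequence[Tuple[int, int, int]]],
    fx: float,
    fy: float,
    cx: float,
    cy: float,
    depth_scale: float = 0.001,  # assumption: raw depth values are in millimeters
) -> List[Point]:
    """Back-project a depth image into a colored point cloud (pinhole model).

    Hypothetical helper: assumes `depth` and `rgb` share the same resolution
    and pixel grid (i.e. depth is already registered to the color camera).
    """
    points: List[Point] = []
    for v, row in enumerate(depth):          # v: pixel row index
        for u, d in enumerate(row):          # u: pixel column index
            if d == 0:                       # zero depth marks a missing measurement
                continue
            z = d * depth_scale              # depth along the optical axis, in meters
            x = (u - cx) * z / fx            # horizontal offset from the principal point
            y = (v - cy) * z / fy            # vertical offset from the principal point
            r, g, b = rgb[v][u]              # color sampled at the same pixel
            points.append((x, y, z, r, g, b))
    return points


if __name__ == "__main__":
    # Tiny 2x2 example with made-up intrinsics; the zero-depth pixel is skipped.
    depth = [[1000, 0], [2000, 1000]]
    rgb = [[(255, 0, 0), (0, 255, 0)], [(0, 0, 255), (10, 20, 30)]]
    for p in depth_to_point_cloud(depth, rgb, 500.0, 500.0, 0.5, 0.5):
        print(p)
```

Fusing the views of several cameras into one point cloud frame would additionally require transforming each camera's points into a common coordinate system using that camera's extrinsic calibration, which this sketch omits.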